Quantum electrodynamics (QED) is the relativistic quantum field theory of electrodynamics. It was developed by a number of physicists, beginning in the late 1920s. In essence, it describes how light and matter interact. QED mathematically describes all phenomena involving electrically charged particles interacting by means of the exchange of photons. Physicist Richard Feynman called it "the jewel of physics" for its extremely accurate predictions of quantities such as the anomalous magnetic moment of the electron and the Lamb shift of the energy levels of hydrogen.[1]

In technical terms, QED can be described as a perturbation theory of the electromagnetic quantum vacuum.

The word 'quantum' is Latin, meaning "how much" (neut. sing. of quantus "how great").[2] The word 'electrodynamics' was coined by André-Marie Ampère in 1822.[3] The word 'quantum', as used in physics, i.e. with reference to the notion of count, was first used by Max Planck in 1900, and its use was reinforced by Einstein in 1905 with his term "light quanta".

Quantum theory began in 1900, when Max Planck assumed that energy is quantized in order to derive a formula predicting the observed frequency dependence of the energy emitted by a black body. This dependence is completely at variance with classical physics. In 1905, Einstein explained the photoelectric effect by postulating that light energy comes in quanta, later called photons. In 1913, Bohr invoked quantization in his proposed explanation of the spectral lines of the hydrogen atom. In 1924, Louis de Broglie proposed a quantum theory of the wave-like nature of subatomic particles. The phrase "quantum physics" was first employed in Johnston's Planck's Universe in Light of Modern Physics. These theories, while they fit the experimental facts to some extent, were strictly phenomenological: they provided no rigorous justification for the quantization they employed.

Modern quantum mechanics was born in 1925 with Werner Heisenberg's matrix mechanics and Erwin Schrödinger's wave mechanics and the Schrödinger equation, which was a non-relativistic generalization of de Broglie's (1925) relativistic approach. Schrödinger subsequently showed that these two approaches were equivalent. In 1927, Heisenberg formulated his uncertainty principle, and the Copenhagen interpretation of quantum mechanics began to take shape. Around this time, Paul Dirac, in work culminating in his 1930 monograph, finally joined quantum mechanics and special relativity, pioneered the use of operator theory, and devised the bra-ket notation widely used since. In 1932, John von Neumann formulated the rigorous mathematical basis for quantum mechanics as the theory of linear operators on Hilbert spaces. This and other work from the founding period remains valid and widely used.

Quantum chemistry began with Walter Heitler and Fritz London's 1927 quantum account of the covalent bond of the hydrogen molecule. Linus Pauling and others contributed to the subsequent development of quantum chemistry.

The application of quantum mechanics to fields rather than single particles, resulting in what are known as quantum field theories, began in 1927. Early contributors included Dirac, Wolfgang Pauli, Weisskopf, and Jordan. This line of research culminated in the 1940s in the quantum electrodynamics (QED) of Richard Feynman, Freeman Dyson, Julian Schwinger, and Sin-Itiro Tomonaga, for which Feynman, Schwinger and Tomonaga received the 1965 Nobel Prize in Physics. QED, a quantum theory of electrons, positrons, and the electromagnetic field, was the first satisfactory quantum description of a physical field and of the creation and annihilation of quantum particles.

QED involves a covariant and gauge invariant prescription for the calculation of observable quantities. Feynman's mathematical technique, based on his diagrams, initially seemed very different from the field-theoretic, operator-based approach of Schwinger and Tomonaga, but Freeman Dyson later showed that the two approaches were equivalent. The renormalization procedure for eliminating the awkward infinite predictions of quantum field theory was first implemented in QED. Even though renormalization works very well in practice, Feynman was never entirely comfortable with its mathematical validity, even referring to renormalization as a "shell game" and "hocus pocus". (Feynman, 1985: 128)

QED has served as the model and template for all subsequent quantum field theories. One such subsequent theory is quantum chromodynamics, which began in the early 1960s and attained its present form in the 1975 work by H. David Politzer, Sidney Coleman, David Gross and Frank Wilczek. Building on the pioneering work of Schwinger, Peter Higgs, Goldstone, and others, Sheldon Glashow, Steven Weinberg and Abdus Salam independently showed how the weak nuclear force and quantum electrodynamics could be merged into a single electroweak force.

Physical interpretation of QED

In classical optics, light travels over all allowed paths and their interference results in Fermat's principle. Similarly, in QED, light (or any other particle, such as an electron or a proton) passes over every possible path allowed by apertures or lenses. The observer (at a particular location) simply detects the mathematical result of all wave functions added up, as a sum of all line integrals. In other interpretations, paths are viewed as non-physical, mathematical constructs that are equivalent to other, possibly infinite, sets of mathematical expansions. As with the paths of nonrelativistic quantum mechanics, the different configurations that contribute to the evolution of the quantum field describing light do not necessarily fulfill the classical equations of motion. So according to the path formalism of QED, one could say light can go slower or faster than c, but will travel at velocity c on average.[4]

Physically, QED describes charged particles (and their antiparticles) interacting with each other by the exchange of photons. The magnitude of these interactions can be computed using perturbation theory; these rather complex formulas have a remarkable pictorial representation as Feynman diagrams. QED was the theory to which Feynman diagrams were first applied. These diagrams were invented on the basis of Lagrangian mechanics. Using a Feynman diagram, one considers every possible path between the start and end points. Each path is assigned a complex-valued probability amplitude, and the actual amplitude we observe is the sum of all amplitudes over all possible paths. The paths with stationary phase contribute most, because they are not cancelled by destructive interference with neighboring counter-phase paths; this results in the stationary classical path between the two points.
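The dominance of stationary-phase paths can be illustrated numerically. The following is a toy sketch, not a QED calculation: it assumes a one-parameter family of paths labelled by a deviation x from the classical path, each with unit-length amplitude and a hypothetical phase K·x² that is stationary at x = 0. The names `path_amplitude`, `summed_amplitude`, the constant `K` and the sampling ranges are illustrative choices, not part of the theory.

```python
import cmath

K = 50.0  # assumed phase curvature; larger K means faster phase rotation


def path_amplitude(deviation):
    """Unit-length amplitude whose phase grows as K * deviation**2 (toy model)."""
    return cmath.exp(1j * K * deviation ** 2)


def summed_amplitude(deviations):
    """Add the amplitudes of all sampled paths."""
    return sum(path_amplitude(x) for x in deviations)


# Two equally sized samples of paths: one around the stationary point,
# one well away from it.
near_paths = [i * 0.001 for i in range(-200, 201)]
far_paths = [i * 0.001 for i in range(800, 1201)]

near_total = summed_amplitude(near_paths)
far_total = summed_amplitude(far_paths)

# The near-stationary sample adds up to a much longer arrow than the far one.
print(abs(near_total), abs(far_total))
```

In this toy model the paths far from the stationary point have rapidly rotating phases and largely cancel one another, while those near it add up nearly in step.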

QED does not predict what will happen in an experiment, but it can predict the probability of what will happen in an experiment, which is how (statistically) it is experimentally verified. Predictions of QED agree with experiments to an extremely high degree of accuracy: currently about 10⁻¹² (and limited by experimental errors); for details see precision tests of QED. This makes QED one of the most accurate physical theories constructed thus far.

Near the end of his life, Richard P. Feynman gave a series of lectures on QED intended for the lay public. These lectures were transcribed and published as Feynman (1985), QED: The strange theory of light and matter, a classic non-mathematical exposition of QED from the point of view articulated above.

A simple but detailed description of QED, on the lines of Feynman's book

The key components of Feynman's presentation of QED are three basic actions.

- A photon goes from one place and time to another place and time.

- An electron goes from one place and time to another place and time.

- An electron emits or absorbs a photon at a certain place and time.

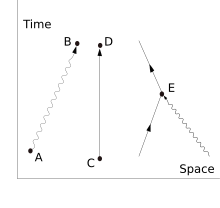

These actions are represented in a form of visual shorthand by the three basic elements of Feynman diagrams: a wavy line, a straight line and a junction of two straight lines and a wavy one. These may all be seen in the adjacent diagram.

It is important not to over-interpret these diagrams. Nothing is implied about how a particle gets from one point to another. The diagrams do not imply that the particles are moving in straight or curved lines. They do not imply that the particles are moving with fixed speeds. The fact that the photon is often represented, by convention, by a wavy line and not a straight one does not imply that it is thought that it is more wavelike than is an electron. The images are just symbols to represent the actions above: photons and electrons do, somehow, move from point to point and electrons, somehow, emit and absorb photons. We do not know how these things happen, but the theory tells us about the probabilities of these things happening. With the help of modern graphics software as opposed to blackboard and chalk, it would probably be better for a beginner to be presented with fuzzy lines to avoid the natural assumption that physics regards particles as moving along trajectories, like bullets.

As well as the visual shorthand for the actions Feynman introduces another kind of shorthand for the numerical quantities which tell us about the probabilities. If a photon moves from one place and time - in shorthand, A - to another place and time - shorthand, B - the associated quantity is written in Feynman's shorthand as P(A to B). The similar quantity for an electron moving from C to D is written E(C to D). The quantity which tells us about the probability for the emission or absorption of a photon he calls 'j'. This is related to, but not the same as, the measured electron charge 'e'.

Now the theory of QED is based on the assumption that complex interactions of many electrons and photons can be represented by fitting together a suitable collection of the above three building blocks, and then using the probability-quantities to calculate the probability of any such complex interaction. It turns out that the basic idea of QED can be communicated while making the assumption that the quantities mentioned above are just our everyday probabilities. (A simplification of Feynman's book.) Later on this will be corrected to include specifically quantum mathematics, following Feynman.

The basic rules of probabilities that will be used are that a) if an event can happen in a variety of different ways then its probability is the SUM of the probabilities of the possible ways and b) if a process involves a number of independent subprocesses then its probability is the PRODUCT of the component probabilities.

Here is an example to show how things work. Suppose we start with one electron at a certain place and time (this place and time being given the arbitrary label A) and a photon at another place and time (given the label B). Then we ask, 'What is the probability of finding an electron at C (another place and a later time) and a photon at D (yet another place and time)?' The simplest process to achieve this end is for the electron to move from A to C (an elementary action) and for the photon to move from B to D (another elementary action). From a knowledge of the probabilities of each of these subprocesses - E(A to C) and P(B to D) - we would expect to calculate the probability of both happening by multiplying them, using rule b) above. This gives a simple estimated answer to our question.
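The two rules and the simple estimate can be sketched in a few lines of Python. This is only an illustration of the everyday-probability picture used at this stage: the function names and the numerical values assigned to E(A to C) and P(B to D) are hypothetical, chosen to make the arithmetic easy to follow.

```python
from math import prod


def process_probability(step_probabilities):
    """Rule b): independent subprocesses multiply."""
    return prod(step_probabilities)


def event_probability(alternatives):
    """Rule a): alternative ways of reaching the same result add."""
    return sum(process_probability(steps) for steps in alternatives)


# Hypothetical values, for illustration only.
E_A_to_C = 0.5   # electron goes from A to C
P_B_to_D = 0.25  # photon goes from B to D

# Only one way is considered here: electron A to C and photon B to D.
simple_estimate = event_probability([[E_A_to_C, P_B_to_D]])
print(simple_estimate)
```

Further ways of reaching the same end result would be added as extra inner lists passed to `event_probability`.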

But there are other ways in which the end result could come about. The electron might move to a place and time E where it absorbs the photon; then move on before emitting another photon at F; then move on to C where it is detected, while the new photon moves on to D. The probability of this complex process can again be calculated by knowing the probabilities of each of the individual actions: three electron actions, two photon actions and two vertices - one emission and one absorption. We would expect to find the total probability by multiplying the probabilities of each of the actions, for any chosen positions of E and F. We then, using rule a) above, have to add up all these probabilities for all the alternatives for E and F. (This is not elementary in practice, and involves integration.) But there is another possibility: the electron first moves to G, where it emits a photon that goes on to D, and then moves on to H, where it absorbs the first photon, before moving on to C. Again we can calculate the probability of these possibilities (for all points G and H). We then have a better estimate of the total probability by adding the probabilities of these two possibilities to our original simple estimate. Incidentally, the name given to this process of a photon interacting with an electron in this way is Compton scattering.

Do we stop with those diagrams? No, there are an infinite number of other intermediate processes in which more and more photons are absorbed and/or emitted. Associated with each of these possibilities there will be a Feynman diagram which helps us to keep track of them. Clearly there is going to be a lot of computing involved in calculating the resulting probabilities, but provided it is the case that the more complicated the diagram the less it contributes to the result, it is only a matter of time and effort to find as accurate an answer as you want to the original question. And that is the basic approach of QED. To calculate the probability of ANY interactive process between electrons and photons it is a matter of first noting, with Feynman diagrams, all the possible ways in which the process can be constructed from the three basic elements. Each diagram involves some calculation involving definite rules to find the associated probability. By adding the probabilities of each diagram we can find the total probability.
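The idea that more complicated diagrams contribute less can be sketched with a toy series. The model below is an assumption made purely for illustration: every additional level of complexity is taken to scale a diagram's contribution by the same small factor `ratio`, which is not how real QED contributions are computed. The names `partial_sums`, `first_term` and `ratio` are hypothetical.

```python
def partial_sums(first_term, ratio, orders):
    """Running totals when each successive order is `ratio` times the previous one."""
    total = 0.0
    sums = []
    for n in range(orders):
        total += first_term * ratio ** n
        sums.append(total)
    return sums


# Assumed suppression factor per extra level of complexity (illustrative).
sums = partial_sums(first_term=1.0, ratio=0.01, orders=5)
for order, value in enumerate(sums):
    print(order, value)
```

Under this assumption each new order changes the running total far less than the one before, so a handful of orders already fixes the answer to many digits.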

That basic scaffolding remains when we move to a quantum description. But there are a number of important detailed changes. The first is that whereas we might expect in our everyday life that there would be some constraints on the points to which a particle can move, that is NOT true in full quantum electrodynamics. There is a certain possibility of an electron or photon at A moving as a basic action to any other place and time in the universe! That includes places that could only be reached at speeds greater than that of light and also earlier times. (An electron moving backwards in time can be viewed as a positron moving forward in time.)

The second important change has to do with the probability quantities. It has been found that the quantities which we have to use to represent the probabilities are not the usual real numbers we use for probabilities in our everyday world, but complex numbers which are called probability amplitudes. Feynman avoids exposing the reader to the mathematics of complex numbers by using a simple but accurate representation of them as arrows on a piece of paper or screen. (These must not be confused with the arrows of Feynman diagrams which are actually simplified representations in two dimensions of a relationship between points in three dimensions of space and one of time.) The amplitude-arrows are fundamental to the description of the world given by quantum theory. No satisfactory reason has been given for why they are needed. But pragmatically we have to accept that they are an essential part of our description of all quantum phenomena. They are related to our everyday ideas of probability by the simple rule that the probability of an event is the SQUARE of the length of the corresponding amplitude-arrow.

The rules as regards adding or multiplying, however, are the same as above. Where you would expect to add or multiply probabilities, instead you add or multiply probability amplitudes.
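The practical difference between adding probabilities and adding amplitudes can be seen in a minimal sketch. The two amplitudes below are hypothetical values, chosen as arrows of equal length pointing in opposite directions; `probability` simply applies the squared-length rule stated above.

```python
def probability(amplitude):
    """Probability is the square of the length of the amplitude arrow."""
    return abs(amplitude) ** 2


# Two alternative ways for the same event (hypothetical amplitudes).
way_1 = complex(0.5, 0.0)
way_2 = complex(-0.5, 0.0)

# Quantum rule: add the amplitudes first, then square the length.
quantum_result = probability(way_1 + way_2)

# Everyday rule: add the probabilities of the two ways.
everyday_result = probability(way_1) + probability(way_2)

print(quantum_result, everyday_result)
```

Here the two arrows cancel, so the quantum rule gives zero probability even though each way on its own has a probability of 0.25.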

How are two arrows added or multiplied? (These are familiar operations in the theory of complex numbers.) The sum is found as follows. Let the start of the second arrow be at the end of the first. The sum is then a third arrow that goes directly from the start of the first to the end of the second. The product of two arrows is an arrow whose length is the product of the two lengths. The direction of the product is found by adding the angles that each of the two have been turned through relative to a reference direction: that gives the angle that the product is turned relative to the reference direction.
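These arrow rules correspond to ordinary complex addition and multiplication, which can be checked with Python's standard `cmath` module. The helper names `arrow` and `length_and_angle` and the sample lengths and angles are illustrative assumptions.

```python
import cmath
import math


def arrow(length, angle_degrees):
    """Build an arrow (complex number) from its length and turning angle."""
    return cmath.rect(length, math.radians(angle_degrees))


def length_and_angle(z):
    """Return the length and the turning angle (in degrees) of an arrow."""
    length, angle = cmath.polar(z)
    return length, math.degrees(angle)


# Product: lengths multiply, angles add.
product = arrow(0.5, 30) * arrow(0.4, 60)
print(length_and_angle(product))

# Sum: place the arrows head to tail. Two perpendicular arrows of
# lengths 0.3 and 0.4 give a resultant of length 0.5.
total = arrow(0.3, 0) + arrow(0.4, 90)
print(length_and_angle(total))
```

The product has a length close to 0.2 and an angle close to 90 degrees, up to floating-point rounding.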

That change, from probabilities to probability amplitudes, complicates the mathematics without changing the basic approach. But that change is still not quite enough because it fails to take into account the fact that both photons and electrons can be polarised, which is to say that their orientations in space and time have to be taken into account. Therefore P(A to B) actually consists of 16 complex numbers, or probability amplitude arrows. There are also some minor changes to do with the quantity "j", which may have to be rotated by a multiple of 90° for some polarisations, which is only of interest for the detailed bookkeeping.

Associated with the fact that the electron can be polarised is another small necessary detail, which is connected with the fact that an electron is a fermion and obeys Fermi-Dirac statistics. The basic rule is that if we have the probability amplitude for a given complex process involving more than one electron, then when we include (as we always must) the complementary Feynman diagram in which we just exchange two electron events, the resulting amplitude is the reverse - the negative - of the first. The simplest case would be two electrons starting at A and B and ending at C and D. The amplitude would be calculated as the "difference", E(A to D) × E(B to C) − E(A to C) × E(B to D), where we would expect, from our everyday idea of probabilities, that it would be a sum.
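The exchange rule can be sketched as follows, writing E(X to Y) for the amplitude for an electron to go from X to Y and ignoring polarisation. The function name and the numerical amplitudes are hypothetical. The overall sign of the difference is a matter of convention and does not affect the resulting probability.

```python
def two_electron_amplitude(e_a_to_c, e_b_to_d, e_a_to_d, e_b_to_c):
    """Amplitude for electrons starting at A and B to end at C and D.

    The direct and exchanged diagrams enter with opposite signs.
    """
    direct = e_a_to_c * e_b_to_d
    exchanged = e_a_to_d * e_b_to_c
    return exchanged - direct


# Hypothetical amplitudes for the case where C and D are the same point,
# so that E(A to C) = E(A to D) and E(B to C) = E(B to D).
e_from_a = complex(0.3, 0.4)
e_from_b = complex(0.1, -0.2)

same_endpoint = two_electron_amplitude(e_from_a, e_from_b, e_from_a, e_from_b)
print(same_endpoint)
```

In this simplified picture the amplitude vanishes when both electrons would end at the same point, which is the kind of cancellation that the minus sign produces and a sum would not.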
